Blog Post: Building a Scalable, Serverless Platform with AWS – A Solo Operator’s Journey

As a solo operator running a content platform with AI-driven features, I’ve learned the importance of building an architecture that’s scalable, compliant, and manageable without a large team. Today, I’m excited to share the architecture behind appA, a serverless platform hosted in AWS us-west-2 and designed to support user authentication, content moderation, payment processing, and more, all while prioritizing regulatory compliance in an ever-evolving AI landscape. Lawmakers are struggling to keep up with rapidly changing AI technology, and the rules keep shifting as a result, so appA leads with compliance to stay prepared for the future. Here’s a deep dive into the architecture, my operational strategies, and the lessons I’ve learned along the way.

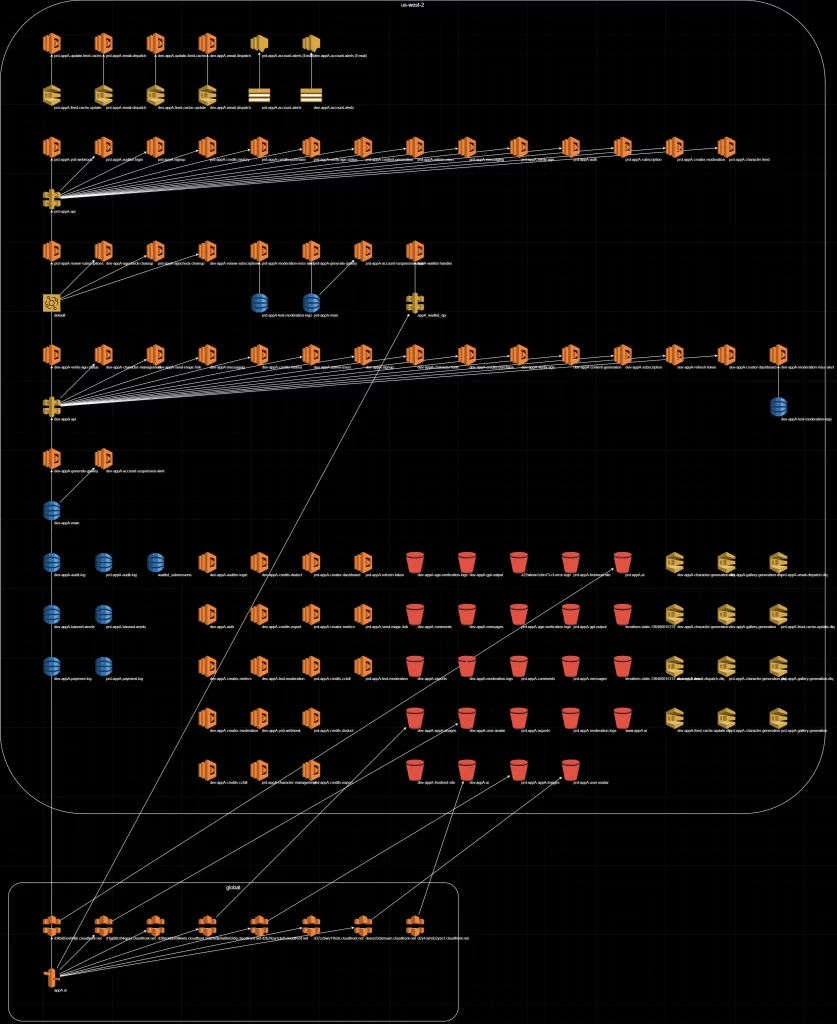

The Architecture: A Serverless Foundation on AWS

At the heart of appA is a fully serverless architecture, leveraging AWS services to ensure scalability, cost-efficiency, and minimal operational overhead. Here’s a breakdown of the key components:

- AWS Lambda: Over 50 Lambda functions handle specific tasks like user authentication, AI image generation, and content moderation. This granularity allows for isolated debugging and independent scaling, making it easier to manage a complex system as a solo operator.

- Amazon API Gateway: API gateways (prd-appA-api, dev-appA-api) serve as entry points for REST APIs, routing requests to Lambda functions for both production and development environments. A third gateway (appA_waitlist_api) handles waitlist submissions, ensuring modularity.

- Amazon SQS with DLQs: Queues like prd-appA-feed-cache-update and dev-appA-email-dispatch enable asynchronous processing, with dead-letter queues (DLQs) to capture failed messages and ensure fault tolerance.

- Amazon DynamoDB: Tables like dev-appA-main store user data, metadata, and logs, providing serverless scalability for data storage.

- Amazon S3 and CloudFront: S3 buckets such as images.prd.appA.ai and avatars.dev.appA.ai offload static assets like images, videos, and JavaScript files, while CloudFront distributions leverage CDN technology to deliver content with low latency and high availability. This setup ensures fast, global access to media-heavy AI-generated content, reducing load on the core application.

- Amazon EventBridge: Triggers periodic operational tasks such as membership renewals, background maintenance, and optimization of non-user-facing workflows.

- Frontend with React: The frontend uses React Swiper with lazy loading to optimize image loading, ensuring fast page loads even with image-heavy AI-generated content.

This architecture, visualized in the diagram above, is designed to handle the demands of a regulated workload (payment processing that meets the requirements of card networks like Visa and Mastercard, input moderation, and AI-driven content generation) while keeping operational complexity manageable.
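
To make the SQS and DLQ flow concrete, here is a minimal Python sketch of a queue consumer. It is an illustration under assumptions, not appA's actual code: the function names, the message shape (a JSON body with a user_id field), and the choice of Python as the runtime are all hypothetical. It relies on Lambda's partial batch response for SQS, which only takes effect when ReportBatchItemFailures is enabled on the event source mapping.

```python
import json
from typing import Any


def process_record(body: dict[str, Any]) -> None:
    """Hypothetical business logic: refresh one user's cached feed."""
    if "user_id" not in body:
        raise ValueError("message is missing user_id")
    # The real work (for example, rebuilding the cached feed) would go here.


def handler(event: dict[str, Any], context: Any) -> dict[str, Any]:
    """SQS-triggered entry point that reports failures per message.

    Only the message IDs listed in batchItemFailures are returned to the
    queue; the rest of the batch is treated as processed.
    """
    failures = []
    for record in event.get("Records", []):
        try:
            process_record(json.loads(record["body"]))
        except Exception as exc:  # one bad message must not fail the batch
            print(json.dumps({
                "level": "error",
                "messageId": record["messageId"],
                "error": str(exc),
            }))
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

A message that keeps failing is retried until it exceeds the queue's maxReceiveCount, at which point SQS moves it to the dead-letter queue, where it can be inspected without blocking the healthy messages behind it.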

Operational Excellence: CI/CD and Solo Management

Running a platform like appA as a solo operator requires a disciplined approach to operations. Here’s how I manage deployments and ensure reliability:

- Terraform with Workspaces: I use Terraform with workspaces to manage dev and prd environments, applying dev.tfvars and prd.tfvars for environment-specific configurations. The AWS Lambda Terraform module streamlines the deployment of 50+ functions, creating IAM roles, log groups, and other resources in a single configuration block. This setup ensures consistency and minimizes misconfiguration risks.

- CI/CD Pipeline with Robust Validation: My CI/CD pipeline is a multi-step process designed for quality, security, and stability. It starts with code quality and security checks: SonarCloud analyzes the code for bugs, vulnerabilities, and maintainability issues; Snyk and Trivy scan the Terraform configurations for security problems so the infrastructure-as-code (IaC) adheres to best practices; and Talisman scans for secrets (e.g., API keys, credentials) to prevent accidental leaks. Once these checks pass, the pipeline switches to the dev workspace and runs terraform plan -var-file=dev.tfvars so I can review the planned changes and confirm that critical resources like databases aren’t accidentally modified. After I approve the plan, the pipeline applies it (terraform apply -var-file=dev.tfvars). If the dev deployment succeeds, the pipeline promotes to prd by running terraform plan -var-file=prd.tfvars, followed by another approval gate, and finally terraform apply -var-file=prd.tfvars. Because promotion is sequential, no change reaches production without first deploying cleanly to dev, which protects my users.

- Targeted Logging: Each Lambda function logs to CloudWatch, making it easy to isolate issues. For example, if the /api/dashboard-feed endpoint fails or runs slowly (it can take up to 2,119 ms on a cold start), I can check that function’s logs directly without sifting through unrelated data, a huge advantage over a monolithic setup.
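
The dev-then-prd promotion described in the pipeline bullet can be sketched in a few lines of Python. This is a simplified model, not my actual pipeline definition: the function names and the approve callback are hypothetical, and the -auto-approve flag is my assumption for non-interactive runs, since the human approval happens at the pipeline gate rather than at Terraform's prompt.

```python
import subprocess
from typing import Callable, Sequence

ENVIRONMENTS = ("dev", "prd")  # promotion order: dev first, then prd


def commands_for(env: str) -> list[list[str]]:
    """Return the Terraform commands for one environment, in order."""
    var_file = f"{env}.tfvars"
    return [
        ["terraform", "workspace", "select", env],
        ["terraform", "plan", f"-var-file={var_file}"],
        # Assumption: -auto-approve, because approval is handled by the gate.
        ["terraform", "apply", "-auto-approve", f"-var-file={var_file}"],
    ]


def run_pipeline(
    run: Callable[[Sequence[str]], int],
    approve: Callable[[str], bool],
) -> list[str]:
    """Deploy dev, then prd; stop at the first failure or rejected plan.

    Returns the environments that were fully applied.
    """
    deployed: list[str] = []
    for env in ENVIRONMENTS:
        select, plan, apply = commands_for(env)
        if run(select) != 0 or run(plan) != 0:
            break
        if not approve(env):  # approval gate after reviewing the plan
            break
        if run(apply) != 0:
            break
        deployed.append(env)
    return deployed


def run_in_shell(cmd: Sequence[str]) -> int:
    """Run a command for real and return its exit code."""
    return subprocess.run(list(cmd)).returncode
```

Passing run and approve in as callables keeps the ordering logic testable without touching real infrastructure; a real run would pass run_in_shell and an approval function backed by the CI system's manual gate.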

Compliance and Scalability: Building for the Future

appA operates in a regulatory landscape where compliance is non-negotiable, especially with card networks like Visa and Mastercard enforcing strict standards and AI regulations evolving rapidly. Here’s how the architecture addresses these needs:

- Compliance Features: Lambda functions handle input moderation to ensure content meets regulatory standards, while others manage age verification and log all actions for auditing. DynamoDB and S3 store metadata and logs (e.g., logs.prd.appA.ai), providing a traceable record for compliance.

- S3 and CloudFront for Scalability: Offloading static assets (images, videos, JavaScript) to S3 with CloudFront ensures fast, global delivery via CDN technology. This setup reduces latency for users worldwide, even as traffic grows, while keeping costs minimal by leveraging S3’s pay-as-you-go pricing and CloudFront’s caching.

- Frontend Optimization: Lazy loading ensures fast page loads for image-heavy AI-generated content, maintaining a seamless user experience even with media-intensive workloads.

- Scalability Roadmap: Cold starts are currently a minor issue. As traffic increases, more requests should land on execution environments that are already warm, which reduces average latency, though bursts of traffic can still trigger cold starts. Provisioned concurrency is on the roadmap for when the platform gains traction.
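
As an illustration of the audit logging mentioned under Compliance Features, here is a minimal Python sketch of how a moderation decision could be turned into a traceable record. Everything specific in it is an assumption for the example: the function name, the field names, and the pk/sk key layout are hypothetical and are not appA's real schema. Storing a hash of the content instead of the content itself is one possible design choice, not a stated requirement.

```python
import hashlib
from datetime import datetime, timezone
from typing import Optional


def build_audit_record(
    user_id: str,
    action: str,
    decision: str,
    content: str,
    now: Optional[datetime] = None,
) -> dict[str, str]:
    """Build one audit item for a moderation or verification decision.

    The content is stored only as a SHA-256 digest, so the record can
    prove what was reviewed without keeping the raw input.
    """
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    return {
        "pk": f"USER#{user_id}",      # hypothetical partition key
        "sk": f"AUDIT#{timestamp}",   # hypothetical sort key, time-ordered
        "action": action,
        "decision": decision,
        "content_sha256": hashlib.sha256(content.encode("utf-8")).hexdigest(),
    }
```

A record shaped like this could be written to DynamoDB or appended to a log object in S3; sorting by the timestamped key gives a per-user history of decisions for an auditor.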

Cost Efficiency: Minimal Spend, Maximum Impact

One of the standout benefits of this serverless architecture is its cost efficiency. Currently, the entire platform (Lambda, API Gateway, SQS, DynamoDB, S3, CloudFront, and more) costs under $5 per month to run. Even at a projected 10,000 monthly users, I expect the spend to remain minimal thanks to AWS’s pay-as-you-go pricing model. S3 and CloudFront, in particular, keep costs low by efficiently handling static assets, while Lambda’s per-invocation pricing ensures I only pay for actual usage. This affordability allows me to focus on building features rather than managing infrastructure costs.
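
To show how per-invocation pricing plays out, here is a rough Python estimate of the Lambda portion of the bill. The inputs are assumptions, not measurements from appA: 100 requests per user per month, a 200 ms average duration, and 512 MB of memory are made-up figures, and the default rates are my understanding of the published x86 on-demand prices, so check the current pricing page before relying on them.

```python
def estimate_lambda_cost(
    requests: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_million_requests: float = 0.20,   # assumed rate, USD
    price_per_gb_second: float = 0.0000166667,  # assumed rate, USD
    free_requests: int = 0,
    free_gb_seconds: float = 0.0,
) -> float:
    """Estimate monthly Lambda cost in USD, rounded to cents."""
    # Compute is billed in GB-seconds: duration times allocated memory.
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    billable_requests = max(0, requests - free_requests)
    billable_gb_seconds = max(0.0, gb_seconds - free_gb_seconds)
    request_cost = billable_requests / 1_000_000 * price_per_million_requests
    compute_cost = billable_gb_seconds * price_per_gb_second
    return round(request_cost + compute_cost, 2)


# Hypothetical load: 10,000 users x 100 requests each = 1,000,000 requests.
monthly = estimate_lambda_cost(10_000 * 100, avg_duration_ms=200, memory_mb=512)
print(monthly)  # 1.87
```

Under these assumptions the workload uses 100,000 GB-seconds, so compute comes to about $1.67 and requests to $0.20. Passing free_requests=1_000_000 and free_gb_seconds=400_000 (the free tier allowances as I understand them) brings the estimate to zero.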

Lessons Learned as a Solo Operator

Building and managing appA has taught me several key lessons:

- Granularity Pays Off: Having 50+ Lambda functions might seem like overkill, but the isolation makes debugging a breeze. A dedicated CloudWatch log group per function means I can pinpoint issues quickly, something a monolith would complicate.

- Automation is Non-Negotiable: Automating CI/CD with rigorous validation (SonarCloud, Snyk, Trivy, Talisman) and approval gates ensures I never deploy untested changes to production. Stability is critical when you’re the only one on call.

- Compliance First: Building in input moderation and logging from the start has made appA compliant with payment processor standards and prepared for evolving AI regulations, saving me headaches down the road.

What’s Next for appA

As appA grows, I’m focused on a few key improvements:

- Cold Start Monitoring: I’ll track InitDuration in CloudWatch to monitor cold start impact, adding provisioned concurrency as needed.

- Dynamic Visualization: I plan to use Python’s Diagrams library to generate dynamic views of prd paths, improving operational visibility.

- Pipeline Auditability: I’ll log all deployment actions to S3 for an auditable trail, supporting compliance requirements.
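
For the cold start monitoring item, here is a minimal Python sketch of how the impact could be measured from log lines that have already been exported from CloudWatch. Lambda writes a REPORT line at the end of each invocation, and that line includes an Init Duration field only when the invocation was a cold start. The function names are hypothetical, and fetching the lines is left out.

```python
import re
from typing import Iterable, Optional

# "Init Duration" appears in a REPORT line only for cold starts.
INIT_DURATION = re.compile(r"Init Duration: ([\d.]+) ms")


def init_duration_ms(report_line: str) -> Optional[float]:
    """Return the init duration in ms, or None for a warm invocation."""
    match = INIT_DURATION.search(report_line)
    return float(match.group(1)) if match else None


def cold_start_summary(log_lines: Iterable[str]) -> dict[str, float]:
    """Summarize cold starts across a function's REPORT lines."""
    reports = [line for line in log_lines if line.startswith("REPORT")]
    inits = [d for d in map(init_duration_ms, reports) if d is not None]
    return {
        "invocations": len(reports),
        "cold_starts": len(inits),
        "cold_start_rate": len(inits) / len(reports) if reports else 0.0,
        "max_init_ms": max(inits, default=0.0),
    }
```

A rising cold_start_rate or max_init_ms on a user-facing function such as the one behind /api/dashboard-feed would be one reasonable signal for enabling provisioned concurrency there first.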

Conclusion

Building appA as a solo operator has been a challenging but rewarding journey. By leveraging AWS’s serverless services, automating deployments with a robust CI/CD pipeline, and prioritizing compliance, I’ve created a platform that’s scalable, cost-efficient (under $5/month!), and manageable without a large team. Whether you’re a startup founder, DevOps engineer, or fellow solo operator, I hope this architecture inspires you to build with scalability, compliance, and efficiency in mind.

What do you think of this setup? I’d love to hear your thoughts in the comments or connect on LinkedIn to discuss serverless architectures, CI/CD best practices, or solo ops strategies!